Physically based rendering (PBR) is a collection of rendering techniques in computer graphics that seeks to render graphics in a way that more accurately models the flow of light in the real world.

This was one of the topics I was most excited to work on, and as it turned out, also one of the harder ones, which made completing it even more enjoyable.

This topic not only helped me explore the various rendering techniques required to implement PBR, but also taught me how some of the DirectX 11 resources and sub-resources work, which I found very valuable as it will help me in future projects.

PBR Theory

PBR rendering techniques are based on microfacet theory, which says that at a microscopic level the surface of any object can be described as a collection of tiny reflective mirrors called microfacets. The alignment of these mirrors varies depending on the roughness of the surface. Based on the roughness of a surface, we can calculate the ratio of microfacets roughly aligned to the halfway vector. The more the microfacets are aligned to the halfway vector, the sharper and stronger the specular reflection.
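To make the "ratio of aligned microfacets" idea concrete, here is a minimal sketch of a normal distribution function. It assumes the Trowbridge-Reitz GGX form, which is a common choice with Cook-Torrance but is not confirmed by this post; the name `distributionGGX` and the convention of squaring the roughness to get alpha are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>

// Trowbridge-Reitz GGX normal distribution function (assumed choice of NDF).
// nDotH:     cosine of the angle between the surface normal and the halfway vector.
// roughness: perceptual roughness in (0, 1]; squared to get alpha (a common convention).
// Returns the relative density of microfacets whose normal lines up with the halfway vector.
float distributionGGX(float nDotH, float roughness) {
    assert(roughness > 0.0f && roughness <= 1.0f);
    const float kPi = 3.14159265358979f;
    float alpha  = roughness * roughness;
    float alpha2 = alpha * alpha;
    float c      = std::fmax(nDotH, 0.0f);
    float denom  = c * c * (alpha2 - 1.0f) + 1.0f;
    return alpha2 / (kPi * denom * denom);
}
```

With the halfway vector exactly on the normal (`nDotH = 1`), a smooth surface returns a much larger value than a rough one, which is the sharp, strong highlight described above. At `roughness = 1` the function flattens out to `1 / pi` for every angle.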

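This theory also takes energy conservation into account: the outgoing light can never exceed the incoming light. Some of the incoming light is reflected and the rest is refracted into the surface. The light that reflects directly from the surface is the specular light. Part of the refracted light is absorbed, and the part that re-emerges after scattering inside the material is the diffuse color. Simulating that internal scattering in more detail is handled by a rendering technique called subsurface scattering.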
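The reflected/refracted split can be sketched in a few lines. This assumes Schlick's Fresnel approximation and a metallic workflow where metals have no diffuse term; both are common conventions rather than something stated in this post, and `fresnelSchlick`, `splitEnergy`, and `EnergySplit` are hypothetical names. A scalar `f0` is used for brevity where a shader would use an RGB value.

```cpp
#include <cassert>
#include <cmath>

struct EnergySplit {
    float kS;  // fraction of light reflected (specular)
    float kD;  // fraction of light refracted and re-emitted (diffuse)
};

// Schlick's approximation of the Fresnel term.
// f0 is the reflectivity when looking straight at the surface (about 0.04 for many dielectrics).
float fresnelSchlick(float cosTheta, float f0) {
    float c = std::fmin(std::fmax(cosTheta, 0.0f), 1.0f);
    return f0 + (1.0f - f0) * std::pow(1.0f - c, 5.0f);
}

// Whatever is not reflected is available for diffuse, so kS + kD never exceeds 1.
// Metals absorb the refracted light, hence the (1 - metallic) factor.
EnergySplit splitEnergy(float vDotH, float f0, float metallic) {
    assert(metallic >= 0.0f && metallic <= 1.0f);
    float kS = fresnelSchlick(vDotH, f0);
    float kD = (1.0f - kS) * (1.0f - metallic);
    return {kS, kD};
}
```

For a dielectric viewed head-on, `splitEnergy(1.0f, 0.04f, 0.0f)` reflects 4% and leaves 96% for diffuse. At a grazing angle almost everything is reflected and the diffuse share drops toward zero.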

All of this builds up to what is called the reflectance equation, a specialized form of the rendering equation, which is used to simulate how light leaves a surface.
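For reference, the reflectance equation is usually written as follows. The symbols are the conventional ones and are not taken from this post: $p$ is the surface point, $\omega_i$ and $\omega_o$ are the incoming and outgoing directions, $n$ is the surface normal, $\Omega$ is the hemisphere around $n$, and $f_r$ is the BRDF.

$$
L_o(p, \omega_o) = \int_{\Omega} f_r(p, \omega_i, \omega_o)\, L_i(p, \omega_i)\, (n \cdot \omega_i)\, d\omega_i
$$

In words: the light leaving $p$ toward the viewer is the sum, over every incoming direction on the hemisphere, of the incoming radiance scaled by the BRDF and by the cosine of the angle of incidence.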

The Bidirectional Reflectance Distribution Function (BRDF) is a function that takes as input the incoming light direction, the outgoing view direction, the surface normal and a surface parameter that represents the microsurface’s roughness. The BRDF approximates how much each individual light ray contributes to the final reflected light of an opaque surface given its material properties. It approximates the material’s reflective and refractive properties based on microfacet theory. Here we are using the Cook-Torrance BRDF.
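In its commonly quoted form, the Cook-Torrance BRDF has a diffuse (Lambert) part and a specular part. The notation here is an assumption: $c$ is the surface albedo, $k_d$ is the diffuse weight, and $D$, $F$ and $G$ are the normal distribution, Fresnel and geometry terms.

$$
f_r = k_d \frac{c}{\pi} + \frac{D \, F \, G}{4 \, (\omega_o \cdot n)(\omega_i \cdot n)}
$$

$D$ describes how many microfacets are aligned with the halfway vector, $F$ describes how much light is reflected at the given angle (so it already plays the role of the specular weight), and $G$ accounts for microfacets shadowing and masking each other. Which concrete functions are plugged in for $D$, $F$ and $G$ is an implementation choice.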

This was a very brief overview of the theory; for a deeper dive into it, I highly suggest this page. Another thing to note here is that this is not a “just gloss over” kind of topic. I’d highly recommend sitting down with pen and paper and making notes for yourself, as I can guarantee that if you are interested in this, it will require multiple reads, and something to compile all your thoughts will serve you well.

Putting the above theory into code results in something that already looks better than the Blinn-Phong model. But since the PBR model computes lighting in High Dynamic Range (HDR), we also need to tone map the result back into the displayable range and apply gamma correction, as the specular highlights would otherwise be too bright.
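The HDR and gamma step is typically a tone mapping operator followed by gamma encoding, applied at the very end of the pixel shader. The sketch below assumes the simple Reinhard operator and a gamma of 2.2; the post does not say which operator was used, and `reinhard`, `gammaEncode`, and `toDisplay` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Color { float r, g, b; };

// Reinhard tone mapping: compresses [0, infinity) into [0, 1).
float reinhard(float x) {
    return x / (1.0f + x);
}

// Gamma encoding: converts a linear value to display space.
float gammaEncode(float x, float gamma = 2.2f) {
    return std::pow(x, 1.0f / gamma);
}

// Converts a linear HDR color into something a standard display can show.
Color toDisplay(Color hdr) {
    assert(hdr.r >= 0.0f && hdr.g >= 0.0f && hdr.b >= 0.0f);
    return { gammaEncode(reinhard(hdr.r)),
             gammaEncode(reinhard(hdr.g)),
             gammaEncode(reinhard(hdr.b)) };
}
```

A very bright highlight such as `{1000, 1000, 1000}` ends up just below 1 instead of clipping, while dark values are barely compressed. The order matters: tone map first in linear space, then gamma encode.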

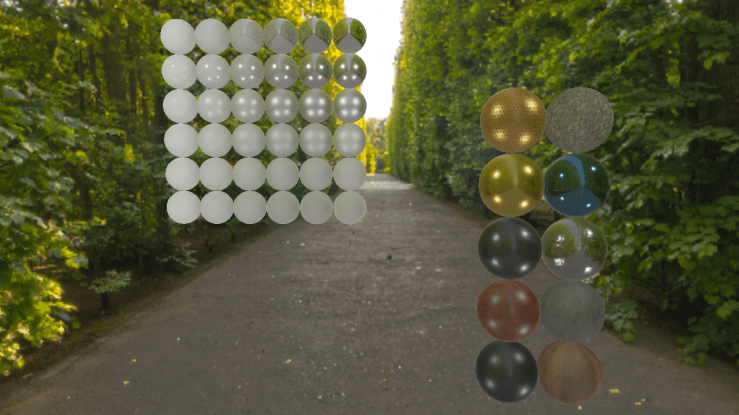

We do all this and feel pretty good about it. Looking good!

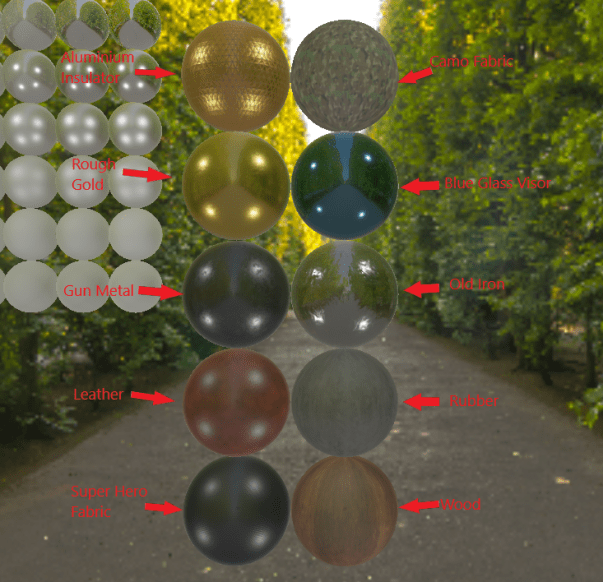

Well, this is only halfway done; everything above was technically pretty straightforward and easy. The next part of the process is called Image Based Lighting (IBL), which takes into account the environment of our scene and how it reflects on our objects. This is the thing that provides the real ‘juice’ to the PBR model.

The first part of IBL is diffuse irradiance, which adds to the diffuse lighting of the object.

This process requires us to take the environment cube map and convolve it, giving a result that we can sample to add to the diffuse lighting of the object.

Convolution is applying some computation to each data entry in a data set considering all other entries in the data set. The dataset here is the environment cube map. So, for every sample direction in the cube map, we take every other sample direction over the hemisphere into account.
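As a rough illustration of that convolution, here is a CPU sketch that sums the environment over a hemisphere with a fixed angular step. It makes several simplifying assumptions: the hemisphere is centred on +Z instead of an arbitrary normal, radiance is a single float, and the environment is a callback rather than a cube map lookup. `convolveIrradiance` and `Vec3` are hypothetical names; in the real pre-process this loop would run in a pixel shader once per texel of each cube face.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Vec3 { float x, y, z; };

// Convolves an environment over the hemisphere around +Z.
// radiance:    returns the incoming light for a given direction.
// sampleDelta: angular step in radians; smaller is more accurate but slower.
float convolveIrradiance(const std::function<float(const Vec3&)>& radiance,
                         float sampleDelta = 0.025f) {
    assert(sampleDelta > 0.0f);
    const float kPi = 3.14159265358979f;
    float sum = 0.0f;
    int count = 0;
    for (float phi = 0.0f; phi < 2.0f * kPi; phi += sampleDelta) {
        for (float theta = 0.0f; theta < 0.5f * kPi; theta += sampleDelta) {
            // Spherical to Cartesian, with theta measured from the +Z axis.
            Vec3 dir{ std::sin(theta) * std::cos(phi),
                      std::sin(theta) * std::sin(phi),
                      std::cos(theta) };
            // cos(theta) weights light by its angle of incidence;
            // sin(theta) compensates for samples bunching up near the pole.
            sum += radiance(dir) * std::cos(theta) * std::sin(theta);
            ++count;
        }
    }
    return kPi * sum / static_cast<float>(count);
}
```

A uniformly white environment should convolve to roughly 1, which is a handy sanity check. The nested loop also shows why this belongs in a pre-process: with the default step it takes about 16,000 samples per output texel.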

Now, there are a lot of tools available that will produce a convolved cube map if you just provide your environment cube map as the input, and the result can be sampled very easily in your shader. But I highly recommend that you compute your own convolved environment map in DirectX itself as a pre-process before the main rendering loop. (Please do not, I mean DO NOT, put it in your main rendering loop, unless you are a bitcoin farmer with a stack of GPUs just lying around, and even then, for the sake of efficiency, just pre-compute it.)

Another reason to do your own convolution is that it forms a good base for the next step, for which there is no cop-out software available, at least none that I could find.

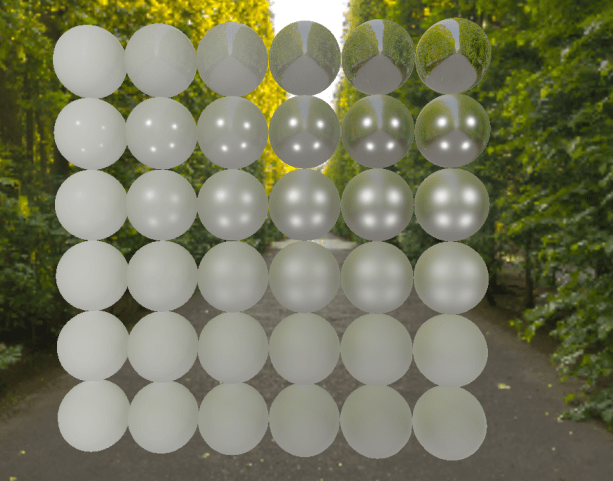

The second step in IBL is the specular IBL, which means pre-filtering our environment map. This involves creating mip-maps of the environment map and convolving each mip level as part of the split sum approximation, making them blurrier for each roughness level we convolve.
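The split sum approximation is often presented in the following simplified form, using the conventional symbols ($L_i$ for incoming radiance, $f_r$ for the BRDF, $n$ for the normal, $\Omega$ for the hemisphere). This is a loose statement of the idea rather than an exact derivation:

$$
\int_{\Omega} L_i(\omega_i)\, f_r(\omega_i, \omega_o)\, (n \cdot \omega_i)\, d\omega_i
\;\approx\;
\left( \int_{\Omega} L_i(\omega_i)\, d\omega_i \right)
\cdot
\left( \int_{\Omega} f_r(\omega_i, \omega_o)\, (n \cdot \omega_i)\, d\omega_i \right)
$$

The first factor depends on the environment and the roughness, and is what gets stored in the mip levels of the pre-filtered environment map. The second factor depends only on the material response and the viewing angle, so it can be baked once into a 2D lookup texture.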

Creating mip-maps is easy, but computing on the mip-maps was the trickier part. This led me to research how DirectX 11 sub-resources work, and eventually to successfully create the pre-filtered environment mip-maps.
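A cube map with mip-maps is a texture array of 6 faces, and every (face, mip) pair is its own sub-resource. The sketch below shows the indexing arithmetic in portable C++ so it runs without the Windows SDK. `calcSubresource` mirrors the formula used by the `D3D11CalcSubresource` helper in `d3d11.h`; `mipSize` and `mipRoughness` are hypothetical helpers, and the linear mip-to-roughness mapping is a common convention assumed here, not something confirmed by this post.

```cpp
#include <cassert>
#include <cstdint>

// Same formula as D3D11CalcSubresource: mips of one array slice are stored
// contiguously, and slices follow one another.
std::uint32_t calcSubresource(std::uint32_t mipSlice,
                              std::uint32_t arraySlice,
                              std::uint32_t mipLevels) {
    assert(mipSlice < mipLevels);
    return mipSlice + arraySlice * mipLevels;
}

// Edge length in texels of a given mip level (each level halves, minimum 1).
std::uint32_t mipSize(std::uint32_t baseSize, std::uint32_t mip) {
    std::uint32_t size = baseSize >> mip;
    return size > 0u ? size : 1u;
}

// Roughness assigned to a mip level: mip 0 is a mirror, the last mip is fully rough.
float mipRoughness(std::uint32_t mip, std::uint32_t mipLevels) {
    assert(mipLevels > 1u && mip < mipLevels);
    return static_cast<float>(mip) / static_cast<float>(mipLevels - 1u);
}
```

For a 128x128 cube map with 5 mip levels, face 3 at mip 2 is sub-resource `calcSubresource(2, 3, 5) = 17`, it is `mipSize(128, 2) = 32` texels wide, and it would be filtered with roughness `mipRoughness(2, 5) = 0.5`. In a renderer, each of those pairs would typically get its own render target view, with the viewport resized to match the mip.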

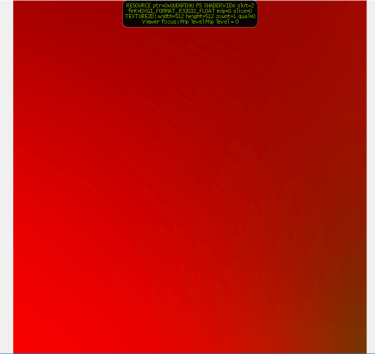

After this, we need to compute the BRDF lookup texture, which is also known as the BRDF integration map.
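Each texel of that lookup texture stores a scale and a bias to apply to the base reflectivity, indexed by the view angle on one axis and roughness on the other. The following is a CPU sketch of how one texel could be computed, assuming the widely used approach of GGX importance sampling with a Hammersley sequence and a Schlick-GGX geometry term. All function names are hypothetical, and the actual project would do this in a shader writing to a two-channel render target.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Van der Corput radical inverse: mirrors the bits of the index around the binary point.
float radicalInverse(std::uint32_t bits) {
    bits = (bits << 16u) | (bits >> 16u);
    bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);
    bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);
    bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);
    bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);
    return static_cast<float>(bits) * 2.3283064365386963e-10f;  // divide by 2^32
}

// Picks a halfway vector around +Z, biased toward where the GGX lobe is strongest.
Vec3 importanceSampleGGX(Vec2 xi, float roughness) {
    const float kPi = 3.14159265358979f;
    float a = roughness * roughness;
    float phi = 2.0f * kPi * xi.x;
    float cosTheta = std::sqrt((1.0f - xi.y) / (1.0f + (a * a - 1.0f) * xi.y));
    float sinTheta = std::sqrt(std::fmax(0.0f, 1.0f - cosTheta * cosTheta));
    return { std::cos(phi) * sinTheta, std::sin(phi) * sinTheta, cosTheta };
}

// Schlick-GGX geometry term with the k remapping commonly used for IBL.
float geometrySchlickGGX(float nDotX, float roughness) {
    float k = (roughness * roughness) / 2.0f;
    return nDotX / (nDotX * (1.0f - k) + k);
}

// Returns {scale, bias} for one texel; the normal is fixed to +Z.
Vec2 integrateBRDF(float nDotV, float roughness, std::uint32_t sampleCount = 1024u) {
    assert(nDotV > 0.0f && nDotV <= 1.0f);
    assert(roughness > 0.0f && roughness <= 1.0f);
    Vec3 v{ std::sqrt(1.0f - nDotV * nDotV), 0.0f, nDotV };
    float scale = 0.0f;
    float bias = 0.0f;
    for (std::uint32_t i = 0u; i < sampleCount; ++i) {
        Vec2 xi{ static_cast<float>(i) / static_cast<float>(sampleCount), radicalInverse(i) };
        Vec3 h = importanceSampleGGX(xi, roughness);
        float vDotH = v.x * h.x + v.y * h.y + v.z * h.z;
        float nDotL = 2.0f * vDotH * h.z - v.z;  // z of L = 2 (V.H) H - V
        if (nDotL > 0.0f) {  // implies vDotH > 0 and h.z > 0
            float g = geometrySchlickGGX(nDotV, roughness) * geometrySchlickGGX(nDotL, roughness);
            float gVis = (g * vDotH) / (h.z * nDotV);
            float fc = std::pow(1.0f - vDotH, 5.0f);
            scale += (1.0f - fc) * gVis;
            bias  += fc * gVis;
        }
    }
    return { scale / static_cast<float>(sampleCount), bias / static_cast<float>(sampleCount) };
}
```

Looping `nDotV` and `roughness` over (0, 1] and writing the two results into the red and green channels would fill the texture. At render time the specular IBL term is then typically the pre-filtered color multiplied by `F0 * scale + bias`.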

Combining everything above results in the PBR model.

GitHub: https://github.com/TheEvilBanana/PhysicallyBasedRendering

Mainstream engines now use image-based lighting to approximate global illumination, but this technique seems to apply only to outdoor scenes. What kind of technology is used to achieve global illumination in indoor scenes? For example, in a room with natural light, or in a completely enclosed indoor environment.
